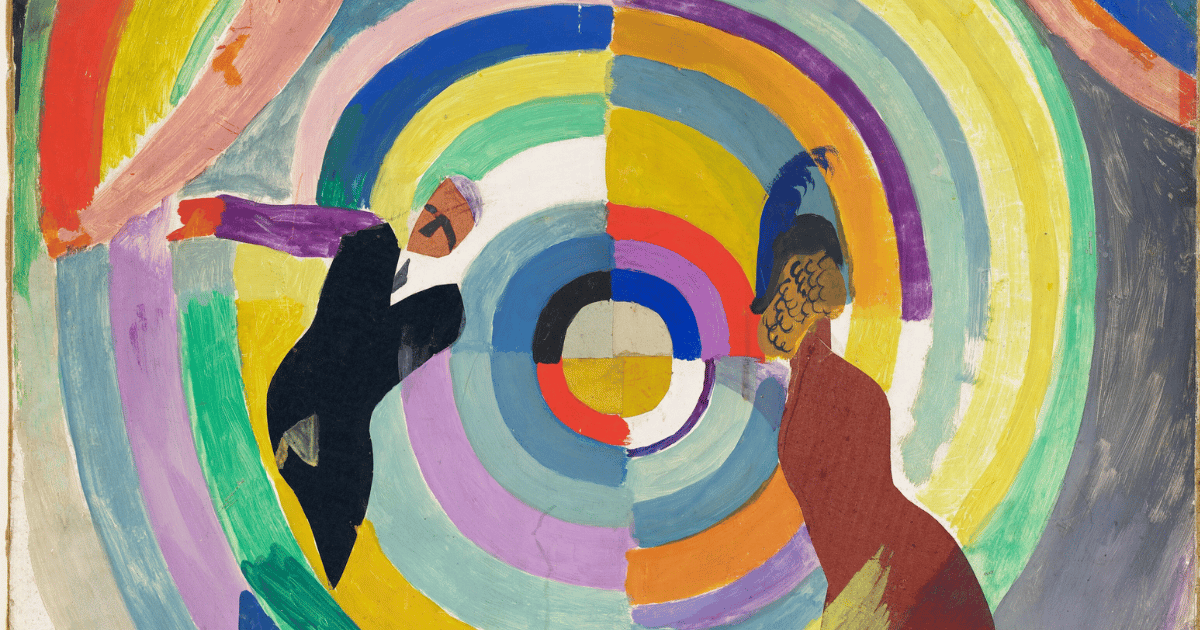

Doughnut Shaped Scholarship

And yet, this framing of quality science has rarely been taken up in earnest, particularly in how science is evaluated or valued in broader society. The dominant understanding of “quality” science – especially in public and policy discourse – is

🍏 Your Thursday Essay, 31st July 2025

A well-researched original piece to get you thinking.

Was this newsletter forwarded to you? Sign up to receive weekly letters rooted in curiosity, care and connection.

Hi Scholar,

This was a difficult essay to write. Some of the ideas presented – especially in the first half – may also be difficult to read, particularly at a time when the importance and value of science are under attack, most notably by the Trump administration in the United States.

As much as we Scholars might want to defend science as infallible, the reality is that it isn’t perfect. Like all systems, the scientific system has flaws: parts that don’t work well, things that could, and should, be improved. To admit this is difficult at the best of times. In the face of what we perceive as systematic efforts to undermine science, it can feel nearly impossible.

But however strongly we may feel compelled to defend the status quo, it’s important to resist becoming dogmatic. To worry about the state of our scientific system is to show our care for it. Efforts to critique can be rooted in love. As such, this essay is a critique, yes, but it is, above all, an act of love.

Doughnut Shaped Science

- Written by The Tatler

I stumbled across this remarkable graph during the research period for the essay On Scholar Led Knowledge Creation.
